Say you want to extract keywords from large piece of text: how would you go about doing it? We set out to build an app that would do just that and include it in the Priceonomics Analysis Engine, our tool for crawling and analyzing web data. That way, if you are crawling a web page, you can easily figure out the important keywords by calling a simple function.

You could start off by looking for the words that appear most frequently in the text. That would give you pretty bad results since the most common words typically include things like “a,” “the,” “is,” etc.

Or you could look look for words that show up much more frequently in the text you’re looking at than they do in a more generic body of texts. If this blog post repeatedly mentions the word “corpus”, and that word is only very rarely used on our blog, that’s a good sign this is an important keyword. The problem with this approach is you need a huge and relevant corpus of text to compare your writing too, and that is expensive unless you are huge company with very particular assets.

Amazon, for example, uses all of the text it has digitized — for its “Search inside!” feature — as its comparison corpus to identify the most “Statistically improbable phrases” keywords in books. Their algorithm is able to list the least probable phrases for just about any book, because it has access to a wide corpus of phrases in many different fields:

For example, most SIPs for a book on taxes are tax related. But because we display SIPs in order of their improbability score, the first SIPs will be on tax topics that this book mentions more often than other tax books. For works of fiction, SIPs tend to be distinctive word combinations that often hint at important plot elements.

Amazon can only do this because its database is so massive and diverse. This database also happens to be proprietary. Which is to say — it’s the right tool for them, and probably not for the Priceonomics Analysis Engine. Even if we did acquire a good enough corpus, we would still need to maintain it, and grow it to our needs. Some of it might need to be labelled by humans for it to be useful to the algorithm, and if we ever wanted to analyze text in a different language, we would need an entirely new corpus.

***

When we were building a keyword extraction tool, we looked for a speedy, lightweight algorithm that could analyze text a priori — it didn’t require any training, and didn’t need any information about the text it was analyzing (like whether it was in English or Spanish). The most common methods didn’t meet these criteria, but we found one that did in “Level statistics of words: Finding keywords in literary texts and symbolic sequences” by Carpena et al. in 2009. Since it only takes a couple of hours for us to write one of these applications on our engine, we figured we’d try it out.

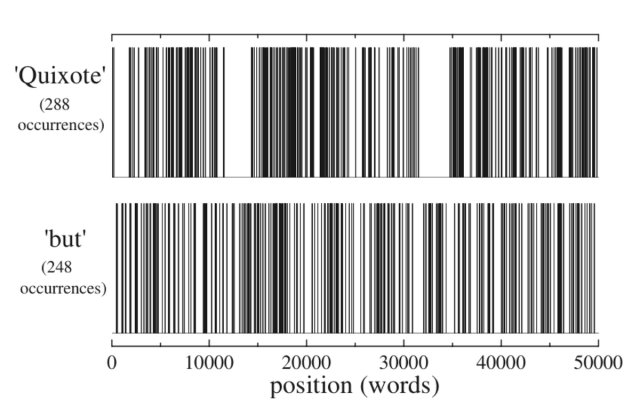

The essence of the approach is this: in a given body of text, unimportant words are randomly distributed, while very important words tend to show up in clusters and non-random patterns. To measure that clustering, the algorithm borrows from quantum mechanics, so the analysis is grounded in an analogy to energy spectra. We convert the text into an array of words, and then construct a “spectrum” for each word, showing the integer position of each occurrence of the word in the array.

Source: Carpena et al.

The spectra above take the first 50,000 words of Don Quixote as source text. Although the words Quixote (more relevant) and but (less relevant) appear with about the same frequency, they have very different patterns of distribution.

By analyzing the spacing of these spectra, we’re able to derive a clustering metric. That clustering metric is combined with the word’s raw frequency, to find the final relevance metric. Then we’re able to rank words by relevance: the top words in this ranking are likely to be the best keywords.

This algorithm has some drawbacks. It doesn’t perform as well with very short texts as it does with longer ones: if your source text is only a couple hundred words long, even an important word might only show up two or three times, making for a pretty sparse and not very meaningful spectrum. Also, in some ways, it’s less flexible than other methods: if you don’t like the keywords the algorithm gives you, there aren’t any magic dials to fiddle with and adjust the sensitivity. If you had a corpus-trained algorithm, you’d be able to narrow or widen the scope by changing the corpus and retraining the algorithm. You can’t do that with our algorithm because there’s no corpus.

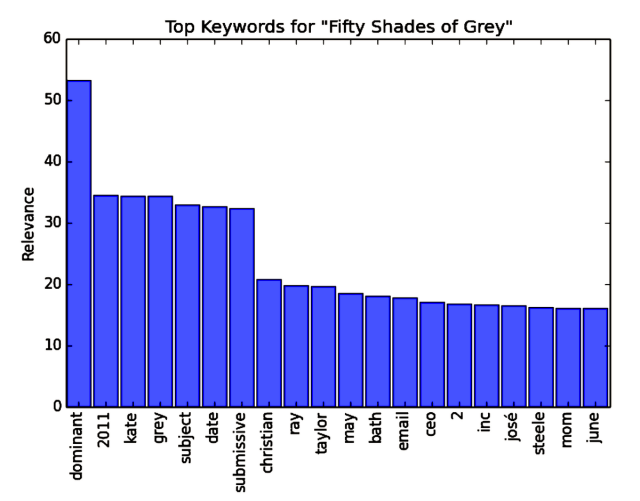

But for bare-bones keyword generation, it does pretty darn well. Here’s an example:

50 Shades of Grey, summarized by the Priceonomics Analysis Engine

We have implemented this automatic keyword analysis in a new app called Keywords for the Priceonomics Analysis Engine. You can use it just by sending in raw text content, and we’ll return a list of all keywords and their associated relevance according to this analysis.

This post was written by Brendan Wood and Rosie Cima. For updates on the API and Analysis Engine, join our developer email list.