“Alexander joins forces with James Madison and John Jay to write a series of essays defending the new United States Constitution, entitled The Federalist Papers…In the end, they wrote eighty-five essays, in the span of six months. John Jay got sick after writing five. James Madison wrote twenty-nine. Hamilton wrote the other fifty-one!”

Aaron Burr in Lin-Manuel Miranda’s Hamilton.

* * *

Good guess, Mr. Burr.

Back in 1788, when Alexander Hamilton was churning out Federalist Papers like he was running out of time, he and his two co-authors, James Madison and John Jay, published their eighty-five arguments in support of the U.S. Constitution under a shared pen name, “Publius.”

Who was this Publius?

Years later, after the Constitution was ratified and the Federalist Papers came to be seen as more than just long-form political advertisements, but among the new nation’s most treasured founding documents, people began to wonder. Which founding father wrote which of these papers? Burr’s operatic tally notwithstanding, this was not public knowledge at the time and Burr famously shot and killed Hamilton before the question could be settled.

Though some of the essays were attributed and claimed without controversy, the debate focused on a dozen. Madison claimed the twelve were his, Hamilton’s political allies insisted otherwise, and because this was one of many things that the two camps disagreed on, the case of the disputed Federalist Papers became a partisan, political controversy.

It also became an enduring mystery about the political origins of our country that lasted for nearly 175 years.

When the case was finally cracked in 1962, it wasn’t at the hands of historians or political scholars, but of two mathematicians. Armed with a controversial statistical theory and tens of thousands of scrap pieces of paper (this was before modern computing), Frederick Mosteller and David Wallace spent three years combing over the written works of Madison and Hamilton to identify subtle patterns of word choice—linguistic fingerprints that could be used to trace the origins of the disputed papers back to their original author.

When Mosteller and Wallace published their results, they not only solved a mystery as old as the Republic, they also pioneered a new mathematical way of analyzing the written word. Suddenly, statisticians and computer scientists could play the role of the textual detective.

In the decades since, this field of statistical authorship attribution has been used to analyze the provenance of religious texts, enhance computer security, deliver asylum seekers from repressive regimes, and uncover the pseudonymous work of one of the world’s most popular authors.

But it all started with the obscure Roman pseudonym, Publius.

Who is Publius?

Back in the 18th century, noms de plume were all the rage in political writing.

Adopting a fake name was, in part, an act of self-protection. When Hamilton, Madison, and Jay were writing their Federalist Papers in order to sell the U.S. Constitution to the voters of New York, there was no constitutional protection of free speech and press (that was kind of the whole point).

But there was another reason to take on a pen name. Not everyone was sold on the United States Constitution in 1788, and the Federalist Papers were a 180,000-word political ad. If you were a citizen of the newly established United States and you were still on the fence about this whole Constitution thing, whom would you find more persuasive: a couple of the partisans who had helped write the document, or a humble citizen named “Publius” whose only stated wish was to “not disgrace the cause of truth”?

Unfortunately, the Publius moniker proved to be a problem when, years later, questions surfaced about who actually wrote each paper. Seventy-three of the eighty-five were attributed without much controversy, including the five that everyone seemed to agree John Jay had written.

But that left twelve unclaimed.

By this time, Hamilton was most closely associated with his Federalist Party and its platform of political and financial centralization. Madison, on the other hand, regarded Hamilton’s national bank plan as (according to a certain Broadway hit) “nothing less than government control” and was solidly in the Democratic-Republican camp. A heated ideological turf war broke out over who could claim ownership of some of the nation’s most important political treatises.

Who wrote the disputed twelve papers? Alexander Hamilton was shot by Aaron Burr in 1804 and took the secret with him to his grave. Illustration by J. Mund.

That battle lasted for decades. In the years immediately following Hamilton’s death at the pistol of Aaron Burr, his allies published an edition of The Federalist that gave full credit for the disputed twelve to their fallen compatriot. These claims were rebuffed by the allies of Madison and, after his death in 1836, new editions of the papers were rolled out in which he got the contested attribution. The back-and-forth carried on amongst politicians and historians for generations.

“This alternating sequence of belief and disbelief that marks the controversy,” wrote the historian Douglass Adair, “is directly correlated with the see-saw of prestige between these two interpreters of the Constitution, depending upon whether agrarian or capitalistic interests were politically dominant in the country.”

Still, these were different men with different ideologies. Why was it so hard to tell Madison and Hamilton apart?

In fact, both in style and substance, Hamilton and Madison’s essays in the Federalist Papers are nearly indistinguishable. Both adhered to the turgid, clause-happy style of the late 18th century. Take this doozy of a sentence from Madison’s Federalist No. 10:

“It will be found, indeed, on a candid review of our situation, that some of the distresses under which we labor have been erroneously charged on the operation of our governments; but it will be found, at the same time, that other causes will not alone account for many of our heaviest misfortunes; and, particularly, for that prevailing and increasing distrust of public engagements, and alarm for private rights, which are echoed from one end of the continent to the other.”

As the mathematicians Mosteller and Wallace showed nearly two centuries later, the average sentence length of both founding fathers clocked in at roughly 35 words. (By comparison, the average sentence length of this article is about 24 words.)

As for substance, the Federalist Papers were meant to present a comprehensive case for the new Constitution. Whatever their private reservations and whatever changes of opinion they would later make (and they made many), under Publius, Hamilton and Madison presented a unified front.

And so, with no obvious differences between the writers, the controversy appeared intractable and the mystery unsolvable. There was simply no way to distinguish Hamilton’s writing from Madison’s in an objective, quantifiable way.

That is, not until Mosteller and Wallace came along in 1959.

What’s in a Conjunction?

Sometime in the late 1950s, the historian Douglass Adair noticed something funny about the writing of Alexander Hamilton and James Madison.

Adair had spent much of his career investigating the mystery of the twelve Federalist Papers and he had finally found a tell: though Hamilton tended to use the word “while,” Madison was a consistent “whilst” man.

As far as forensic evidence goes, this was more smudged partial fingerprint than DNA. Some of the unattributed essays didn’t include either “while” or “whilst,” so it wasn’t a useful discriminator in every case. And even when the occasional “whilst” did pop up, its mere appearance didn’t rule out Hamiltonian authorship entirely. Both authors mixed up their conjunctions occasionally.

Still, Adair felt that he was onto something, so he sent a letter to Frederick Mosteller.

Mosteller was a professor at Harvard and on his way to becoming one of the country’s preeminent statisticians. Back in the 1940s, Mosteller had done his own study of the Federalist question. Teaming up with the political scientist Frederick Williams, he had painstakingly measured the average sentence length in Hamilton and Madison’s known essays, hoping to find a consistent difference which could then be used to ID the disputed texts.

But they had come up with nothing: the founding fathers were uncannily similar. Both wrote with average sentence lengths of 35 words. There was no difference to exploit.

Mosteller and Williams did not invent the idea of analyzing texts in this numerical way.

As far back as 1851, the British mathematician Augustus De Morgan wondered if St. Paul had in fact written all thirteen of the Pauline epistles in the New Testament and whether one could tell by measuring the average sentence length in each book. He never bothered to try, but he still predicted what was to come.

“[One] of these days spurious writings will be detected by this method,” he wrote in a letter to a friend. “Mind, I told you so.”

James Madison: Fourth President, co-author of the Federalist Papers, not the subject of a hit Broadway musical.

A few decades later, the American physicist Thomas Corwin Mendenhall actually gave the method a go. Rather than measure sentence length, Mendenhall looked at word length, believing that authors distinguished themselves by how frequently they used big and small words. He used the method to compare various passages of Charles Dickens’ Oliver Twist and William Thackeray’s Vanity Fair. But average word length varied from passage to passage, and there was no obvious difference between the two authors.

Not all of the early attempts at statistical authorship attribution were failures. In 1939, the British statistician, George Udny Yule, put De Morgan’s sentence length metric to the test and found that patterns in The Imitation of Christ more closely resembled the writing of the 15th century monk, Thomas à Kempis, than they did those of Jean Gerson, to whom it was commonly attributed.

But in the 1940s, Mosteller had tried both sentence and word length on the disputed Federalist essays, to no avail. Writing about the experience later, Mosteller said that he used his study with Frederick Williams as a teachable moment to illustrate that failure in academic research is unavoidable.

That is when Adair wrote to Mosteller, encouraging him to get back into the textual forensics game.

The key, Adair insisted, wasn’t to focus on the lengths of sentences and words, but on the individual words themselves. If Madison and Hamilton differed so dramatically in their use of “while” and “whilst,” maybe there were other linguistic tells waiting to be discovered.

Weighing the Evidence

Prompted by Adair, Mosteller decided to take another crack at the Federalist Papers question in the summer of 1959.

This time, he was joined by a statistician from the University of Chicago, David Wallace. For Mosteller and Wallace, the study provided the opportunity to answer two questions.

First, if all went well, they might just solve one of the longest lasting mysteries about the founding fathers.

But second, and of equal importance to the two mathematicians, the project gave them an opportunity to take a promising, if controversial, approach to statistical inference out for a spin.

Since the early 20th century, the field of statistics had been oriented around a certain view of probability. In a “frequentist” experiment, as this theoretical approach came to be known, one starts with an initial hypothesis—say, that you have an undoctored coin with a 50% chance of coming up heads—and then you flip the coin to see if your results are consistent with that world view. If they are, then you stick to your initial assumption. If they are sufficiently inconsistent, then the statistician rejects his initial hypothesis and adopts a new one: the coin is probably phony.

The controversial alternative was the Bayesian approach. Rather than start off with a fixed idea of how the world is and then ask how consistent the evidence is with that worldview, the Bayesian approach starts off with evidence and then looks around for a worldview that matches. The results of an experiment do not reject or accept a given hypothesis; they provide relative probabilities that something is one way or another: say, the odds that a particular essay was written by Alexander Hamilton or James Madison.
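To make the contrast concrete, here is a minimal Python sketch of the coin example. It is an illustration only, not anything Mosteller and Wallace ran: the function names, the 8-heads-in-10-flips data, and the rival "weighted coin" hypothesis (70% heads) are all assumptions made up for this example.

```python
from math import comb


def binom_pmf(heads, flips, p):
    """Probability of exactly `heads` heads in `flips` tosses of a coin with P(heads) = p."""
    return comb(flips, heads) * p**heads * (1 - p) ** (flips - heads)


def two_sided_p_value(heads, flips, p=0.5):
    """Frequentist check: total probability, under the starting hypothesis,
    of every outcome no more likely than the one observed."""
    observed = binom_pmf(heads, flips, p)
    return sum(
        prob
        for prob in (binom_pmf(k, flips, p) for k in range(flips + 1))
        if prob <= observed * (1 + 1e-9)  # small tolerance for float rounding
    )


def posterior_odds(heads, flips, p_a, p_b, prior_odds=1.0):
    """Bayesian view: odds of hypothesis A over hypothesis B after seeing the flips."""
    likelihood_ratio = binom_pmf(heads, flips, p_a) / binom_pmf(heads, flips, p_b)
    return prior_odds * likelihood_ratio


if __name__ == "__main__":
    # Hypothetical data: 8 heads in 10 flips.
    print("p-value under a fair coin:", two_sided_p_value(8, 10))
    print("odds of fair (0.5) vs. weighted (0.7):", posterior_odds(8, 10, 0.5, 0.7))
```

The first function answers the frequentist question (how surprising is this result if the coin is fair?), while the second returns what the Bayesian wants: relative odds between two competing explanations.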

In the first half of the 20th century, the frequentist view dominated statistics, but around the time that Douglass Adair made his “while”/“whilst” discovery, Bayes was making a gradual comeback over the strong opposition from the old guard.

“Though much has been written recently about statistical methods using Bayes’ theorem, we are not aware of any large-scale analyses of data that have been publicly presented,” wrote Mosteller and Wallace.

Here, at last, was an opportunity.

“By,” “To,” “From,” “Rooster”

In this article, the word “to” appears 98 times—or at a rate of about 27 “to”s per 1,000 words. It is easy to figure this out because the article was written on a computer in 2016.

But counting the frequency of different words in the Federalist Papers wasn’t quite so easy in 1959.

“The words in an article were typed one word per line on a long paper tape,” Mosteller recounts. “Then with scissors the tape was cut into slips, one word per slip. These were then alphabetized by hand. We had many helpers with this.”

The process took months. Every once in a while, someone would open a door too quickly, sending a gust of air through the room and setting everyone back a few days.

The IBM 7090, which Mosteller and Wallace used to statistically analyze the disputed Federalist Papers, 3,000 words at a time. Image: NASA Ames Research Center.

Once all the words were printed out and sorted, the Wallace and Mosteller team set out to find “discriminators.” These are words that Madison may have used much more frequently than Hamilton, or vice versa. The best candidates were “non-contextual” words—conjunctions, prepositions, articles. These are words that people use all the time and more or less equally from one context to the next, regardless of the topic.

“These function words are much more stable than content words and, for the most part, they are also very frequent, which means you get lots of data,” explains Patrick Juola, a professor of computer science at Duquesne University and an expert in text analysis.

“That’s opposed to the word ‘rooster,’ which I believe occurs roughly one word out of every million words in written English,” he says. “If I were waiting for you to say ‘rooster’ so that I could identify something that you wrote, it could be months.”

Some of these non-contextual discriminators discriminated better than others.

For example, both Hamilton and Madison used the word “from” at roughly the same rate, while Madison was almost twice as likely to use “by.” So Wallace and Mosteller tossed out “from,” but kept “by.” After a couple years of work, the pair had identified thirty words that consistently distinguished the two founding fathers.
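With a computer, the counting step shrinks to a few lines. The Python sketch below computes a word's rate per 1,000 words and keeps only the candidate words whose rates differ noticeably between two writing samples. The function names, the 1.5x cutoff, the small rate floor, and the two toy sentences are all assumptions for illustration; they are not Mosteller and Wallace's actual selection procedure or data.

```python
import re
from collections import Counter


def rate_per_thousand(text, word):
    """Occurrences of `word` per 1,000 words of `text` (case-insensitive)."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    return 1000 * Counter(words)[word] / len(words)


def discriminators(text_a, text_b, candidates, min_ratio=1.5, floor=0.1):
    """Keep candidate words whose usage rates differ by at least `min_ratio`
    between the two samples. `floor` avoids dividing by a zero rate."""
    keep = []
    for word in candidates:
        rate_a = max(rate_per_thousand(text_a, word), floor)
        rate_b = max(rate_per_thousand(text_b, word), floor)
        if max(rate_a, rate_b) / min(rate_a, rate_b) >= min_ratio:
            keep.append(word)
    return keep


if __name__ == "__main__":
    # Toy samples, invented for this example (not real Federalist text).
    sample_a = "by the law and by the people from the states"
    sample_b = "from the law of the people by the states"
    print(discriminators(sample_a, sample_b, ["from", "by"]))
```

In the toy samples, “from” turns up at nearly the same rate in both, so it is dropped, while “by” is kept. Real samples would need to be thousands of words long for the rates to mean anything.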

The next step was to build the statistical model and test the method out.

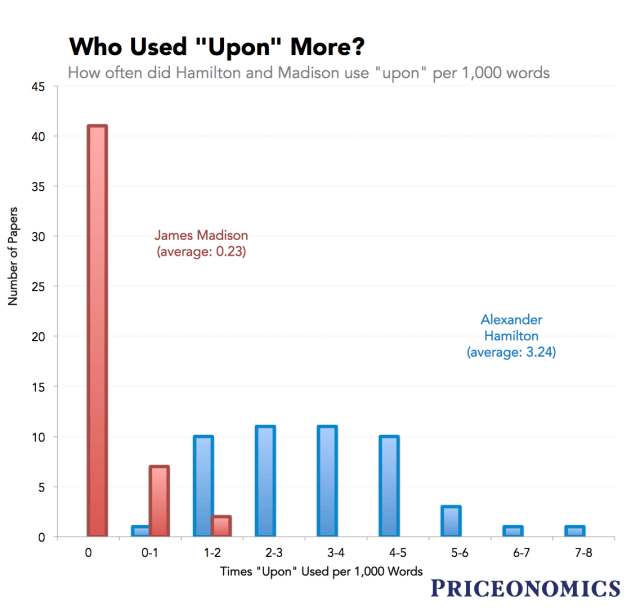

Plugging Bayes-inspired code into an IBM 7090 and processing the text 3,000 words at a time (any more and the machine would “go crazy,” wrote Mosteller), the model took the rate of use of a particular word in one of the contested essays (say, how often the word “upon” popped up) and then compared that rate to those found in the known works of Hamilton and Madison. In the majority of the 50 Madison essays measured, that founding father did not use the word at all. The 49 Hamilton papers clocked in at an average rate of 3.24 “upon”s per 1,000 words. In other words, a low “upon” count in a disputed paper would boost the odds that Madison was the author, while a high count tipped the scales towards Hamilton.

This information was then used to update the odds assigned to either author. Mosteller and Wallace would then feed in another word, which would be used to update the odds again.
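The word-by-word updating can be sketched in Python as follows. This is a deliberately simplified stand-in, not the authors' actual model: it assumes each word's count follows a Poisson distribution and that words are independent of one another. Only Hamilton's 3.24 “upon”s per 1,000 words comes from the study as described above; the other rates in the table are hypothetical placeholders, as are the function names.

```python
from math import exp, lgamma, log


def poisson_log_pmf(count, expected):
    """Log-probability of seeing `count` occurrences when `expected` are anticipated."""
    return count * log(expected) - expected - lgamma(count + 1)


def log_odds_hamilton(counts, n_words, rates, prior_log_odds=0.0):
    """Start from the prior and, one word at a time, add the log-likelihood
    ratio (Hamilton vs. Madison). Positive totals favor Hamilton."""
    log_odds = prior_log_odds
    for word, count in counts.items():
        rate_h, rate_m = rates[word]  # occurrences per 1,000 words
        expected_h = rate_h * n_words / 1000
        expected_m = rate_m * n_words / 1000
        log_odds += poisson_log_pmf(count, expected_h) - poisson_log_pmf(count, expected_m)
    return log_odds


# (Hamilton rate, Madison rate) per 1,000 words.
# 3.24 is the figure quoted above; every other number is a made-up assumption.
RATES = {"upon": (3.24, 0.2), "by": (7.0, 12.0)}

if __name__ == "__main__":
    # Hypothetical 2,000-word disputed paper with no "upon" and 24 uses of "by".
    result = log_odds_hamilton({"upon": 0, "by": 24}, 2000, RATES)
    favored = "Hamilton" if result > 0 else "Madison"
    print(f"odds favor {favored} by about {exp(abs(result)):,.0f} to 1")
```

Working in log-odds turns each update into a simple addition, which is also why a handful of modestly informative words can compound into very lopsided final odds.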

How reliable were these odds? To find out, the two statisticians set the model loose on 22 essays which were known to have been written by Hamilton and Madison. The method accurately predicted the authorship of each one. Even its most “inconclusive” result, an assessment of a work by Hamilton, suggested that he was 20 times more likely than Madison to have written the essay. The model’s best performance, in contrast, accurately gave the edge to Madison with odds of about 160 billion to 1.

James Madison didn’t use the word “upon” much. Incidentally, neither did the author of the disputed papers. Data: Mosteller and Wallace, who used 99 papers for these data, 50 from Madison and 49 from Hamilton.

Finally, it was time to test the dozen disputed papers. With apologies to fans of Lin-Manuel Miranda, the results all pointed in the same direction: James Madison was almost certainly the author of all twelve.

Of course, even with odds as high as 12.6 million-to-1 in the case of one essay, there was still room for doubt. As Mosteller himself pointed out, “the odds can never be greater than the odds against an outrageous event.” What if Jefferson, whose style was not measured by the model, had secretly written the unattributed Federalist papers? What if the stray bits of paper had been miscounted by a sleep-deprived graduate student in a way to skew the results just so? What if the authors themselves were perpetuating a hoax? All of these outcomes are unlikely, but each is surely more likely than 1 in 12.6 million.

Writing about the study in September of 1962, a journalist from Time seemed to agree.

The statistical results, he wrote, “may be quite satisfactory to an IBM 7090, but will still leave any professional writer with a nagging question: What if Hamilton really wrote the papers and Madison later edited them, dourly scratching out upon whenever he came upon it?”

From Hamilton to Harry Potter

Mosteller and Wallace put a 174-year-old mystery to rest, but they also pioneered the use of applied Bayesian statistics and launched a new field of text analysis.

Though there had been previous attempts to statistically analyze text, none had been so academically rigorous, mathematically complex, or exhaustive. In the years since, computationally-assisted statistical authorship attribution has become a cottage industry.

Just last week, Christopher Marlowe received long-overdue credit as co-writer of three “Shakespeare” plays. The text analysis that verified this long-suspected collaboration has its roots in the work of Mosteller and Wallace.

Since 1962, similar treatment has been given to Mormon scripture, to a work falsely attributed to Mark Twain, to one of L. Frank Baum’s Oz books, to the radio speeches of Ronald Reagan, and a few more times to the Federalist Papers. (Each of these subsequent attempts has agreed with Mosteller and Wallace).

Then there is the case of J.K. Rowling.

In July of 2013, a freelance reporter from the Sunday Times newspaper in England decided to follow up on a rumor that a new crime novel, The Cuckoo’s Calling, was in fact the work of the literary powerhouse behind the Harry Potter series.

The journalist reached out to Patrick Juola from Duquesne University. Juola is well known in the world of text forensics and is the creator of the Java Graphical Authorship Attribution Program (JGAAP for short). JGAAP is an improvement upon the Bayesian model of Mosteller and Wallace in the same way that a Tesla is an improvement upon the Model T.

Rather than hand-pick the best discriminators, as Mosteller and Wallace painstakingly did in their Federalist Papers study, JGAAP selects these linguistic fingerprints automatically. It also doesn’t just restrict its analysis to word frequency, but tries to distinguish one author from another based on punctuation, the use of different word and letter combinations, and a host of other metrics.

And while Mosteller and Wallace took years to complete their study, Juola was able to turn around his J.K. Rowling analysis in a matter of hours.

At the request of the Sunday Times, Juola used JGAAP to compare Rowling and three other female British crime novelists to the alleged author of The Cuckoo’s Calling, an unknown military veteran named “Robert Galbraith,” along four different metrics.

“The syntax was Rowling-like, the vocabulary was Rowling-like, the complexity was Rowling-like, the punctuation was Rowling-like,” says Juola. “Which is more plausible, that [Galbraith] is that similar to Rowling, or that ‘he’ is just Rowling?”

According to the model, the chances that any author picked at random would match so consistently with Galbraith without actually being Galbraith were roughly 1-in-16. While it wasn’t impossible that Rowling was not the author of The Cuckoo’s Calling, her authorship certainly looked likely. In any case, the day after the Times published the article, Rowling fessed up.

J.K. Rowling, a.k.a. Robert Galbraith. Image: Daniel Ogren.

According to Juola, the result came as a vindication—proof that this kind of super-charged statistical text analysis is “not just black magic.” But these days he’s working to make the program more accurate.

“One chance in 16? I don’t want somebody to call me on a murder case,” he says.

But if not murder, then perhaps immigration.

A few years ago, Juola took a call from an attorney representing someone applying for asylum status. This person, whom Juola declined to describe in any detail in order to protect that person’s privacy, was a journalist and had written a series of newspaper articles critical of his or her home country’s repressive government. Though these articles were written anonymously, the journalist was now concerned that the government had learned of their true authorship.

The journalist now needed to prove to United States Citizenship and Immigration Services that he or she had written the anonymous articles and was thus deserving of political asylum.

Juola fed the journalist’s published works into JGAAP along with the anonymous articles and the work of five other writers. As in the Rowling case, the results were suggestive, if not conclusive. JGAAP put the odds that the articles had not been written by the journalist somewhere between 2.78% and 16.7%.

As Juola writes, these odds were evidently good enough to clear the “‘balance of probabilities’ burden of proof in a civil case.” The journalist was granted asylum.

Using statistical analysis to rescue a writer from political repression and score a win for freedom of expression?

No doubt both Alexander Hamilton and James Madison would have been proud.
