This post is adapted from the blog of Datafiniti, a Priceonomics Data Studio customer.

***

The internet is full of people giving their opinion on things. From blogs to forums to social media, the internet is a tool that empowers people to share what they think. Much of the time, these posts are not particularly useful (and sometimes even harmful), but for e-commerce sites, user reviews have been revolutionary.

Right now, there are millions of products available to purchase online. Even without seeing the product or knowing the specific seller, you can make a well-informed decision before buying just by reading about the experiences of people who have already purchased it. Academic evidence agrees: studies show that reviews influence customers' purchasing decisions.

But not all reviews are created equal. Some are thorough and provide details on a specific product feature, while others are vague and unintelligible gibberish. Research shows users put a higher value on well-written reviews. Websites like Amazon take this into account by letting you rate whether a review is helpful or not.

Reading through so many reviews ourselves got us thinking: is the quality of writing (spelling, grammar, etc.) markedly different between positive and negative reviews?

We analyzed data from Priceonomics customer Datafiniti, a data company that maintains a database of online products and their reviews. To answer our question, we compiled 100,000 reviews from thousands of different products. To make sure our data inputs were standardized, we specifically used reviews that had both a star rating (to help us determine if a review was positive or negative) and a written review. On this data, we completed a series of analyses that assessed three aspects of writing quality:

- Length of review

- Spelling errors

- Improper use of grammar
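The post does not describe Datafiniti's record format, so the filtering step below is only a minimal sketch of "keep reviews that have both a star rating and a written review". The `Review` class and `usable_reviews` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    # Hypothetical record shape; the actual Datafiniti schema is not described in the post.
    rating: Optional[int]  # star rating, 1-5
    text: Optional[str]    # written review body


def usable_reviews(reviews):
    """Keep only reviews that have both a 1-5 star rating and non-empty text."""
    return [
        r for r in reviews
        if r.rating in (1, 2, 3, 4, 5) and r.text and r.text.strip()
    ]


sample = [
    Review(5, "Works great"),
    Review(None, "No rating here"),
    Review(2, ""),
    Review(1, "Arrived broken and support never replied."),
]
print(len(usable_reviews(sample)))  # 2
```

Dropping whitespace-only text is an assumption here; the post only says each review had to include a written review.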

According to our data, negative reviews have a higher rate of misspelled words and a higher rate of incorrectly used apostrophes. They also tend to be longer and more detailed. Five-star reviews are typically shorter and more likely to omit sentence-ending punctuation. Across the board, reviewers make a lot of spelling and grammar mistakes: only 61% of reviews passed all of our quality checks.

From our findings, we can say that when people are writing negative reviews, they create longer and more error-filled prose than those who are sharing positive reviews.

***

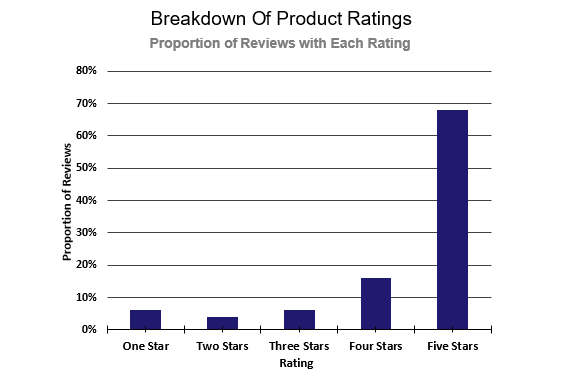

Before we dive into the content of the reviews, let’s look at the distribution of star ratings (from one to five stars) across products. The following bar chart shows what proportion of our records fall into each rating.

Data source: Datafiniti

This shows us that, by a wide margin, five-star ratings are the most common. Understanding this will help us make sense of overall trends and make comparisons as we get into more specific details.
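Computing the rating distribution is a simple frequency count. The sketch below, using a hypothetical `rating_shares` helper and made-up toy ratings (not Datafiniti's data), returns the share of reviews at each star level.

```python
from collections import Counter


def rating_shares(ratings):
    """Return the fraction of reviews at each star rating, 1 through 5."""
    counts = Counter(ratings)
    total = len(ratings)
    return {star: counts.get(star, 0) / total for star in range(1, 6)}


# Hypothetical toy ratings, not Datafiniti's data.
shares = rating_shares([5, 5, 5, 4, 1])
print(shares)  # {1: 0.2, 2: 0.0, 3: 0.0, 4: 0.2, 5: 0.6}
```

Iterating over `range(1, 6)` rather than over the counter keeps ratings with no reviews in the output as explicit zeros.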

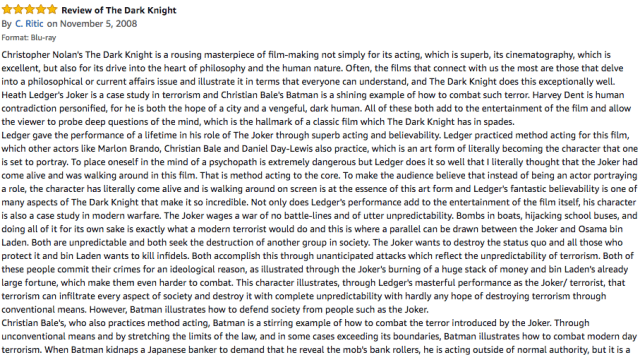

Next, we want to evaluate review length, an area of high variability in our data. Some product reviews are extremely concise (several tie for the shortest, at a single word), while others could meet the length requirement for a high school essay (one user provided a very thoughtful 1,028-word analysis of “The Dark Knight”).

Source: Amazon.com

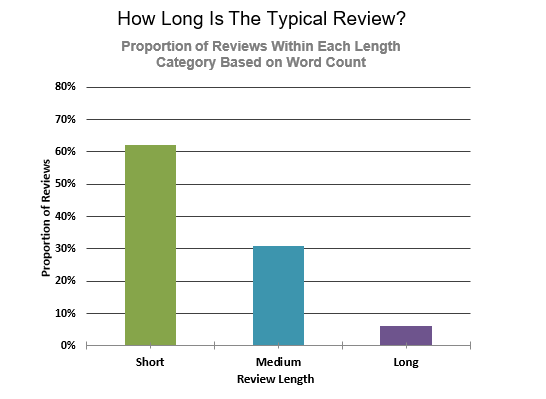

To make this easier to visualize, we first grouped our product reviews into three buckets by length: short (1–25 words), medium (26–100 words), and long (101 or more words). Below is a chart showing the distribution of our reviews across these three groups.

Data source: Datafiniti

Most product reviews are under 26 words. While a short blurb can still offer some helpful feedback, it is hard to pack much value into so few words. The extreme end of the spectrum is one-word reviews: approximately 1% of our data is made up of single-word comments.
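The length buckets can be sketched as below. The post does not say how it counted words, so this assumes words are whitespace-separated tokens; `word_count`, `length_bucket`, and `one_word_share` are hypothetical helper names.

```python
def word_count(text):
    # Assumption: words are whitespace-separated tokens; the post does not specify its tokenizer.
    return len(text.split())


def length_bucket(text):
    """Assign a (non-empty) review to the post's buckets: short (1-25), medium (26-100), long (101+)."""
    n = word_count(text)
    if n <= 25:
        return "short"
    if n <= 100:
        return "medium"
    return "long"


def one_word_share(texts):
    """Fraction of reviews consisting of a single word."""
    return sum(word_count(t) == 1 for t in texts) / len(texts)


print(length_bucket("Love it"))        # short
print(length_bucket("word " * 26))     # medium
print(length_bucket("word " * 101))    # long
print(one_word_share(["Great", "Broke after a week", "Perfect!", "Meh it works"]))  # 0.5
```

Under this tokenizer, punctuation stays attached to its word, so "Perfect!" still counts as a one-word review.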

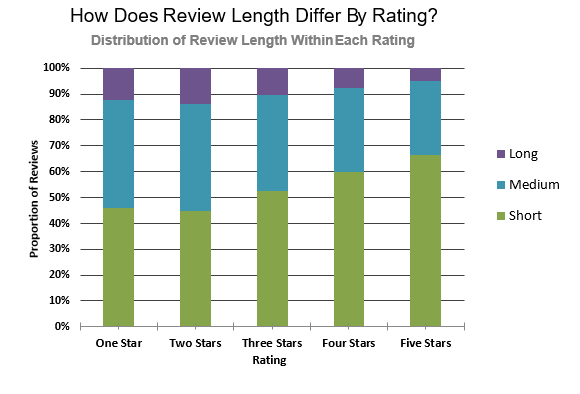

Now, how does review length correlate with the product rating? Below, for each star rating (one through five stars), we’ve broken down the proportion of product reviews that fall into each length category: short, medium, or long.

Data source: Datafiniti

Five-star reviews have the highest percentage of short reviews, while one-star reviews have the highest proportion of long reviews. There’s an apparent trend: the more negative the review, the longer it tends to be.
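The per-rating breakdown normalizes within each star rating, so each rating's three shares sum to 1. The following is a minimal sketch under that reading, using a hypothetical `length_mix_by_rating` function, the same whitespace word count assumed earlier, and toy reviews.

```python
from collections import Counter, defaultdict


def bucket(n_words):
    # Same thresholds as the post: short 1-25, medium 26-100, long 101+.
    return "short" if n_words <= 25 else "medium" if n_words <= 100 else "long"


def length_mix_by_rating(reviews):
    """For each star rating, the fraction of its reviews in each length bucket.

    `reviews` is a list of (rating, text) pairs; proportions are normalized
    within each rating, so each rating's shares sum to 1.
    """
    counts = defaultdict(Counter)
    for rating, text in reviews:
        counts[rating][bucket(len(text.split()))] += 1
    return {
        rating: {b: c[b] / sum(c.values()) for b in ("short", "medium", "long")}
        for rating, c in sorted(counts.items())
    }


toy = [(5, "Great"), (5, "Love it"), (1, "bad " * 120), (1, "Stopped working")]
print(length_mix_by_rating(toy))
# {1: {'short': 0.5, 'medium': 0.0, 'long': 0.5}, 5: {'short': 1.0, 'medium': 0.0, 'long': 0.0}}
```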

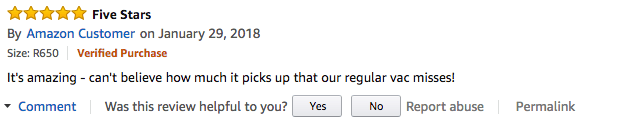

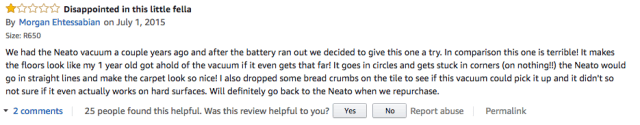

This is easy to rationalize. When you have a bad product experience, there is typically a specific thing that went wrong: the product arrived broken, didn’t work as intended, or was simply poor quality. On the flip side, when a product works as intended and you’re happy, there usually isn’t one specific thing to point to. Below are two reviews of the same product, a new Roomba vacuum: one happy, the other disappointed.

Source: Amazon.com

***

The next measure on our rubric of writing quality is spelling. Using a spell checker, we can flag all misspellings contained in our review text.
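The post does not name the spell checker it used. As an illustration of the idea only, the sketch below flags any token missing from a dictionary; `KNOWN_WORDS` is a tiny hypothetical stand-in for a real word list, and `misspellings` is a hypothetical helper.

```python
import re

# Hypothetical stand-in dictionary; a real spell checker would load a full word list.
KNOWN_WORDS = {"the", "battery", "died", "after", "a", "week", "and", "it", "was", "great"}


def misspellings(text, known=KNOWN_WORDS):
    """Return tokens not found in the dictionary (case-insensitive)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in known]


print(misspellings("The battrey died after a weeek"))  # ['battrey', 'weeek']
```

A real checker would also need to handle proper nouns, brand names, and product model numbers, which a plain dictionary lookup would flag as errors.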

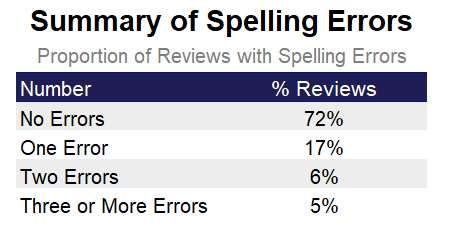

Before comparing positive and negative reviews, we want a sense of spelling ability across the whole dataset. The following table shows what proportion of product reviews contain spelling errors, broken down by how many errors each contains.

Data source: Datafiniti

It turns out that product reviewers are not so bad at spelling: 72% of reviews have no errors (though spell check may have something to do with this). The share of reviews falls off steadily as the number of errors rises, with only 5% containing three or more errors.
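Tabulating the error counts amounts to capping each review's count at 3 and taking shares. The sketch below assumes each review has already been reduced to an integer error count, for example the length of the list returned by a spell checker. `error_count_distribution` is a hypothetical name and the counts are made up.

```python
from collections import Counter


def error_count_distribution(error_counts):
    """Share of reviews with 0, 1, 2, or 3+ spelling errors."""
    capped = Counter(min(n, 3) for n in error_counts)  # fold 3, 4, 5, ... into one bucket
    total = len(error_counts)
    labels = {0: "0", 1: "1", 2: "2", 3: "3+"}
    return {labels[k]: capped[k] / total for k in range(4)}


# Hypothetical per-review error counts, not Datafiniti's data.
print(error_count_distribution([0, 0, 0, 1, 2, 0, 5, 0]))
# {'0': 0.625, '1': 0.125, '2': 0.125, '3+': 0.125}
```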

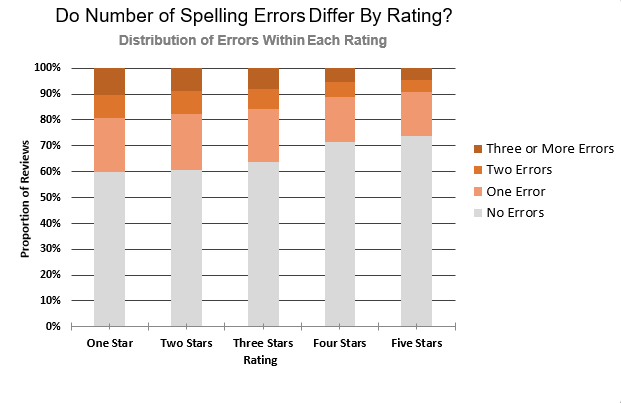

Next, we break the same analysis down by star rating to look for trends. In the bar graph below, for each rating, we plot the proportion of product reviews with at least one spelling error.

Data source: Datafiniti

We can see a trend: the more negative the review, the more likely it is to contain at least one spelling error. This is likely related to the fact that negative reviews are longer. A longer review offers more opportunities to make spelling mistakes.

To account for word count, we now look at the average rate of errors. The table below summarizes the average number of spelling errors per word for each rating group. The reciprocal of this rate gives the average number of words between spelling errors.

The table shows that errors are somewhat less frequent in positive reviews: 1.6% of the words in five-star reviews are misspelled, versus 2.0% in one-star reviews. That is roughly one error every 63 words versus one every 50. However, the difference between high and low ratings is smaller than our earlier analysis suggested before we controlled for review length. We also see a slightly different overall trend here: errors occur most frequently in three-star reviews, about once every 45 words.
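A per-word rate and the "words between errors" figure are reciprocals of each other. The post does not say whether it pooled words across reviews or averaged each review's own rate. The sketch below assumes pooling, and `errors_per_word` and `words_between_errors` are hypothetical names. It also converts the reported rates.

```python
def errors_per_word(reviews):
    """Pooled spelling-error rate for a group: total errors / total words.

    Assumption: the post does not say whether it pooled words across reviews
    or averaged each review's own rate; this sketch pools them.
    `reviews` is a list of (error_count, word_count) pairs.
    """
    total_errors = sum(e for e, _ in reviews)
    total_words = sum(w for _, w in reviews)
    return total_errors / total_words


def words_between_errors(rate):
    # The reciprocal of the per-word rate: on average, one error every 1/rate words.
    return 1 / rate


# Converting the post's reported rates:
print(round(words_between_errors(0.016), 1))  # 62.5 (five-star: 1.6% of words)
print(round(words_between_errors(0.020), 1))  # 50.0 (one-star: 2.0% of words)
```

Going the other way, three-star reviews' one error per 45 words corresponds to a rate of about 1/45, or 2.2% of words. Pooling gives long reviews more weight than averaging per-review rates would, which matters here because review length varies so much by rating.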

***

Our final category of writing assessment is grammar. In our analyses, we will focus on proper use of apostrophes in contractions as well as general punctuation use.

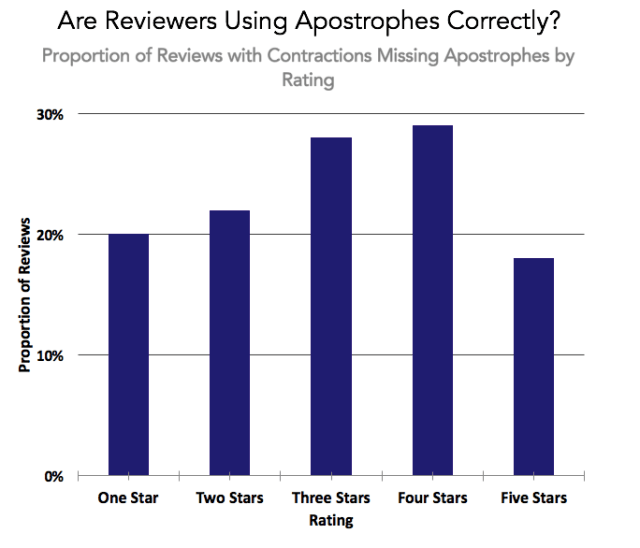

To see whether reviewers use apostrophes correctly, we flagged contractions written with an apostrophe and those written without one. For example, we can tell whether someone wrote “cant” or “can’t.”
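One way to build such a flag is a lookup of apostrophe-less spellings. The sketch below is a hypothetical approach, not the post's documented method. `MISSING_APOSTROPHE` and `contraction_flag` are illustrative names, and the word list is deliberately short. Because "cant" is also a rare English word, this lookup will occasionally misfire.

```python
import re

# Hypothetical lookup: apostrophe-less spellings mapped to their correct contraction.
# Ambiguous forms such as "its", "were", "well", and "wont" are deliberately left out,
# since they are also ordinary words.
MISSING_APOSTROPHE = {
    "cant": "can't", "dont": "don't", "doesnt": "doesn't", "didnt": "didn't",
    "isnt": "isn't", "wasnt": "wasn't", "wouldnt": "wouldn't", "couldnt": "couldn't",
    "shouldnt": "shouldn't", "havent": "haven't", "thats": "that's", "ive": "I've",
}
CORRECT_FORMS = {v.lower() for v in MISSING_APOSTROPHE.values()}


def contraction_flag(text):
    """Return 'incorrect' if any contraction lacks its apostrophe, 'correct' if
    contractions appear and all are written properly, or None if none appear
    (such reviews would be excluded from the apostrophe analysis)."""
    # Normalize curly apostrophes (U+2019) to straight ones before tokenizing.
    tokens = re.findall(r"[a-z']+", text.lower().replace("\u2019", "'"))
    if any(t in MISSING_APOSTROPHE for t in tokens):
        return "incorrect"
    if any(t in CORRECT_FORMS for t in tokens):
        return "correct"
    return None


print(contraction_flag("I cant believe it broke"))     # incorrect
print(contraction_flag("I can\u2019t believe it broke"))  # correct
print(contraction_flag("Works as described"))          # None
```

A review containing both correct and incorrect contractions is labeled "incorrect", matching the "at least one apostrophe-related error" framing.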

In the following bar graph, we show the percentage of reviews containing at least one apostrophe-related error, by star rating. For this analysis, we considered only product reviews with a flag for correct or incorrect contraction use (about 14% of reviews). Reviews without any contractions (the majority) were excluded.

Data source: Datafiniti

Again, five-star reviews have the lowest error rate, with 18% containing an error. One-star reviews are slightly higher, at 20%. In this case, four-star reviews actually have the highest rate of apostrophe-related errors, at 29%.

Our final punctuation-related analysis looks at whether reviewers include any sentence-ending punctuation at all. Ending a sentence without a final period is common online, but it is still grammatically incorrect. The bar chart below shows, by rating, the percentage of product reviews without any periods, question marks, or exclamation marks.
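The check described above reduces to asking whether any of the three characters appears anywhere in the text. This is a minimal sketch with hypothetical helper names. It simplifies by treating any period, including one inside a number like "3.5", as punctuation, and by ignoring the single-character ellipsis "…".

```python
def lacks_end_punctuation(text):
    """True if the review contains no period, question mark, or exclamation mark."""
    # Simplification: any '.', '?', or '!' counts, even mid-word or inside numbers.
    return not any(ch in ".?!" for ch in text)


def share_without_punctuation(texts):
    """Fraction of reviews with no sentence-ending punctuation at all."""
    return sum(lacks_end_punctuation(t) for t in texts) / len(texts)


print(lacks_end_punctuation("love it"))   # True
print(lacks_end_punctuation("Love it!"))  # False
print(share_without_punctuation(["great", "Great.", "ok", "Fine?"]))  # 0.5
```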

In this case, five-star reviews are more likely to omit all sentence-ending punctuation, though the difference is small. This is likely related to the fact that these reviews are often shorter, and writers commonly leave off punctuation in a comment of one or a few words. So while higher-rated reviews initially seemed to be better written, there are measures on which they fall short.

***

Our analysis showed that five-star reviews have the lowest incidence of spelling errors and of most of the grammar errors we measured. One-star reviews had the most spelling errors, and more negative reviews tended to perform worse across grammar metrics. Still, positive reviews have errors too, as we saw with apostrophe errors in four-star reviews and missing end-of-sentence punctuation in five-star reviews. Review length could be one factor behind the differences in the kinds of errors we see between positive and negative reviews.

Ultimately, these differences may not matter. Perhaps we should not make such a big deal about writing errors. Many writers, scholars, and angry people on the internet are pushing back on strict grammar rules. English is a living language that evolves with use, and the internet is an increasingly important influence on it.

What matters most is that a review is thoughtful and contributes to an informed consumer decision. A few mistakes in the writing will not detract from a genuinely helpful comment.

***

Note: If you’re a company that wants to work with Priceonomics to turn your data into great stories, learn more about the Priceonomics Data Studio.