“Every individual piece of writing is a function of all writing.”

~ Philosopher Alan Watts


In Kurt Vonnegut’s classic novel Cat’s Cradle, the character Claire Minton has a fantastic ability: simply by reading the index of a book, she can deduce almost every biographical detail about the author. From scanning a sample of text in the index, she is able to figure out with near certainty that a main character in the book is gay (and therefore unlikely to marry his girlfriend). Claire Minton knows this because she is a professional indexer of books.

And that’s what computers are today — professional indexers of books.

Give a computer a piece of text from the 1950s, and based on the frequency of just fifteen words, the machine will be able to tell you whether the author is white or black. That’s the claim from two researchers at the University of Chicago, Hoyt Long and Richard So, who deploy complicated algorithms to examine huge bodies of text. They feed the machine thousands of scanned novels’ worth of data, which it analyzes for patterns in the language (the frequency, presence, absence and combinations of words), and then they test big questions about literary style.

“The machine can always — with greater than a 95 percent accuracy — separate white and black writers,” So says. “That’s how different their language is.”
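To make the idea of classifying by word frequency concrete, here is a minimal sketch in Python. It is not Long and So's method, which the article does not describe in detail. It assumes a simple nearest-centroid rule, and the vocabulary, group labels and sample sentences are all invented placeholders.

```python
from collections import Counter
import re


def word_frequencies(text, vocabulary):
    """Return the relative frequency of each vocabulary word in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    total = len(tokens) or 1  # avoid dividing by zero on empty text
    return [counts[word] / total for word in vocabulary]


def centroid(vectors):
    """Average a list of equal-length frequency vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def nearest_label(vector, centroids):
    """Pick the label whose centroid is closest (squared Euclidean distance)."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: squared_distance(vector, centroids[label]))


# Hypothetical vocabulary and toy training texts, invented for illustration.
VOCAB = ["river", "city", "night"]
TRAINING = {
    "group_a": ["the river ran by the river bank at night", "a river and a river"],
    "group_b": ["the city never sleeps in the city", "city lights and city streets"],
}

centroids = {
    label: centroid([word_frequencies(text, VOCAB) for text in texts])
    for label, texts in TRAINING.items()
}

print(nearest_label(word_frequencies("down by the river", VOCAB), centroids))  # group_a
```

A real study would use thousands of texts and a carefully chosen word list, and it would report accuracy on texts held out from training. The sketch only shows how a handful of word counts can become a decision.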

This is just one example. The group is digging deeper into other questions of race in literature but isn’t ready to share the findings yet. Minority writers represent a tiny fraction of American literature’s canonical texts. The researchers hope that by shining a spotlight on unreviewed, unpublished or forgotten authors (now easier to identify with digital tools), or by simply approaching popular texts with different examination techniques, they can shake up conventional views on American literature. Though the tools are far from perfect, scholars across the digital humanities are increasingly training big computers on big collections of text to answer and pose new questions about the past.

“We really need to consider rewriting American literary history when we look at things at scale,” So says.

Who Made Who

A culture’s corpus of celebrated literature functions like its Facebook profile. Mob rule curates what to teach future generations and does so with certain biases. It’s not an entirely nefarious scheme. According to Dr. So, people can only process about 200 books. We can only compare a few at a time. So all analysis is reductive. The novel changed our relationship with complicated concepts like superiority or how we relate to the environment. Yet we needed to describe — and communicate — those huge shifts with mere words.

In machine learning, algorithms process reams of data on a particular topic or question. This eventually allows a computer to recognize certain patterns, whether that means spotting tumors, cycles in the weather or a quirk of the stock market. Over the last decade this has given rise to the digital humanities, where professors with large corpuses of text (or any data, really) use computers to develop hard metrics for areas that might previously have been seen as more abstract.

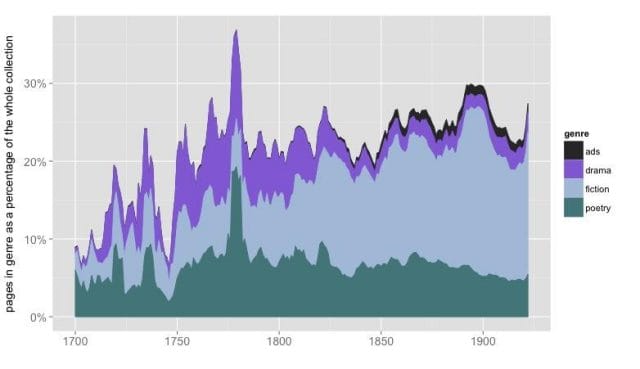

Ted Underwood at the University of Illinois specializes in 19th century literature. He once took on economist Thomas Piketty’s claim that financial descriptions fell from fiction after 1914 due to a devaluation of money after the World Wars — and using machine analysis, seemed to prove the celebrated economist wrong. But Underwood’s work primarily uses computers to understand how genre has evolved over time.

He tests how well the machine matches books against thousands of already-scanned books within a genre. Detective stories? Easy. The general cadence of the plot (the crime, the interrogation, the resolution) has stayed fairly consistent since the genre began. He can see, statistically, how words like “whoever” increase in frequency towards the middle of the story. Which makes sense. It’s almost as easy for a computer to recognize sci-fi books, Underwood says, as the concepts of size (bigness and littleness) turn out to be central pillars of early science fiction writing. Computers pick up those patterns across the whole corpus.
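One simple way to see a word rise and fall over the course of a story is to cut the text into equal slices and measure the word's share of each slice. The sketch below is an illustration only, not Underwood's actual pipeline. The function name, the three-slice split and the twelve-word toy "story" are assumptions made for the example.

```python
import re


def frequency_by_position(text, word, segments=3):
    """Split the text into equal slices and return the word's share of each slice."""
    tokens = re.findall(r"[a-z']+", text.lower())
    size = max(1, -(-len(tokens) // segments))  # ceiling division
    profile = []
    for i in range(segments):
        chunk = tokens[i * size:(i + 1) * size]
        profile.append(chunk.count(word) / len(chunk) if chunk else 0.0)
    return profile


# Toy "story" of twelve words, invented for illustration.
story = " ".join(
    ["crime"] * 4                            # beginning
    + ["whoever", "did", "whoever", "knew"]  # middle
    + ["case", "closed", "at", "last"]       # end
)

print(frequency_by_position(story, "whoever"))  # [0.0, 0.5, 0.0]
```

Averaging such profiles over thousands of books in a genre is what would let a pattern like a mid-story bump show up statistically.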

Ted Underwood

But there are cases where a genre doesn’t hold as neatly together. “That’s where we start to test the claims we’d like to make about literary history,” Underwood says. The definition of a gothic novel changes enough that Underwood says gothic scholars should examine the real boundaries of what they’re discussing. Underwood doesn’t want to say that scholars who studied gothics are necessarily “wrong”.

“It’s not that simple. Critics may just be seeing a different kind of continuity. It’s not a continuity that’s easy to recognize in the text,” he says. “It appears that the genre is not as stable. Different people may be talking about different things.”

Yet analysis should go far beyond word frequency. Did a writer get reviewed? What year were they published? Where did they live? Those are all critical variables to understanding the context of the work.

“If you’ve got a model with a lot of variables, you can draw pretty concrete boundaries,” he says. “Computers can describe that.”

‘Cause We Never Go out of Style

Courtesy of Richard So and Hoyt Long

One of the trickiest questions in dissecting the arts is that of influence. Where does style come from? Academics evaluate who a writer claims as influences and then look for other tells in their work. But the degree of the influence is largely subjective.

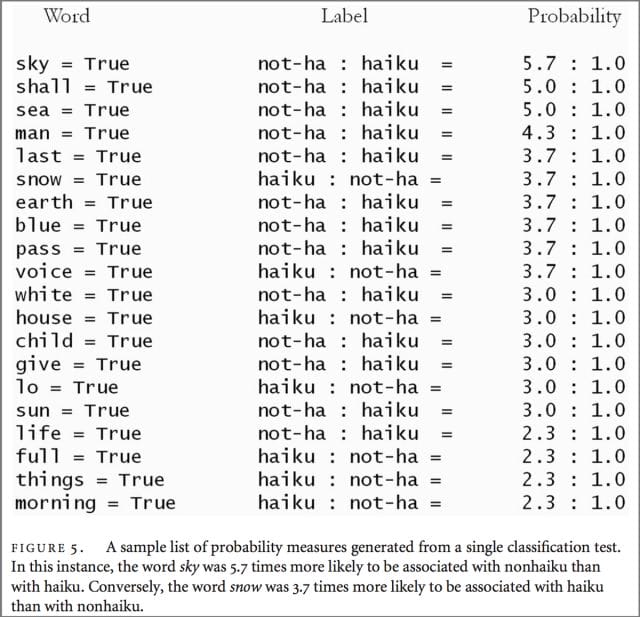

In tandem with their questions about racial patterns for American writers, Long and So have been investigating the influence of haiku in American poetry. Japanese poems with 5-7-5 syllable lines date back centuries in their home country, but didn’t spread to western literature until the early 1900s. Writers in the United States and Europe started reading, and loving, the form and soon were trying it themselves.

Recognizing another 5-7-5 line poem isn’t too tricky. The question Long and So wanted to answer was how haiku permeated into writers’ other poetry. In what new directions did haiku push American poets? How do scholars better spot a poet who is incorporating elements of haiku?

To build the tool, they took a canonical group of haiku, which most scholars recognize as standard, and trained the machine on those as a baseline. The idea was to build an algorithm that was sensitive to a haiku’s rhythm, syllables or pacing and then look for similar features in western poetry.

But So says they didn’t need it:

“We just needed like 20 or 30 words. It really confounds intuition. You would think it would be hard to build a computational algorithm to search for haikus. It’s actually not true. You can build an incredibly simple algorithm to find haiku with an incredible degree of accuracy. And that was really surprising to us. That resists a consensus among cultural scholars. These texts are not that sophisticated, in some ways. They’re very predictable, even more predictable than spam in some ways.”

If it seems like the researchers dulled their search tool a little — from complicated algorithm to counting words — that was deliberate. This is the push and pull between computer and humans. Loosening the search parameters allows them to evaluate a bleeding edge of what was considered haiku influence. Now they could see the close cases and make a determination — a determination that a machine could not.
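A word-counting detector with a deliberately loose middle band might look something like the sketch below. The marker words and thresholds are invented for illustration. The article does not list the 20 or 30 words the researchers used, and this is not their algorithm.

```python
import re

# Hypothetical marker words, invented for illustration only.
MARKER_WORDS = {"moon", "blossom", "frog", "pond", "snow", "autumn", "dew", "crow"}


def marker_score(poem):
    """Fraction of the poem's words that appear in the marker list."""
    tokens = re.findall(r"[a-z']+", poem.lower())
    if not tokens:
        return 0.0
    hits = sum(1 for token in tokens if token in MARKER_WORDS)
    return hits / len(tokens)


def triage(poem, low=0.05, high=0.2):
    """Sort a poem into likely, borderline or unlikely haiku influence.

    The thresholds are arbitrary assumptions. The borderline band is the
    set of close cases a human reader would judge.
    """
    score = marker_score(poem)
    if score >= high:
        return "likely"
    if score >= low:
        return "borderline"
    return "unlikely"


print(triage("old pond a frog leaps in"))                                     # likely
print(triage("we walked home beneath the moon talking about rent and work"))  # borderline
print(triage("the stock market closed higher today"))                         # unlikely
```

Widening the gap between `low` and `high` sends more poems to the human pile, which is the trade-off the researchers describe: the machine narrows the field and the scholar makes the call.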

Mark Algee-Hewitt’s group in Stanford’s English department used machines to examine paragraph structure in 19th century literature. We all know that in most literature, when the writer moves to a new paragraph, the topic of the paragraph will change. That’s English 101.

But Algee-Hewitt says they also found something that surprised them: whether a paragraph had a single topic or multiple topics was not governed by the paragraph’s length. One might think that a long paragraph would cover lots of ground. That wasn’t the case. Topic variance within a paragraph has more to do with story genre and setting than with length.

Now they are looking for a pattern by narrative type.

“The truth is that we really don’t know that much about the American novel because there’s so much of it, so much was produced,” says So. “We’re finding that with these tools, we can do more scientific verification of these hypotheses. And frankly we often find that they’re incorrect.”

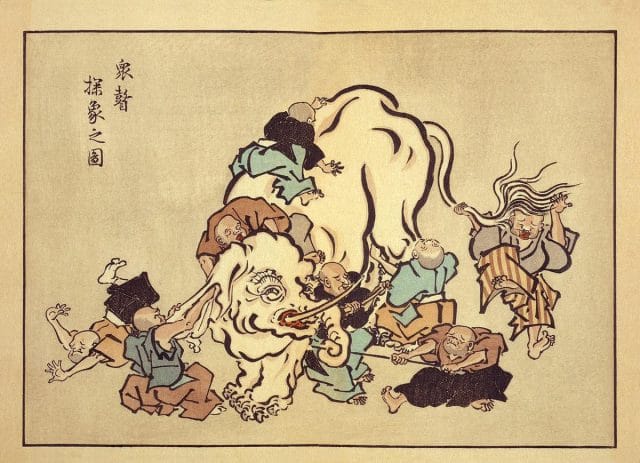

The Blind Men and The Elephant

Wikicommons

But a computer can’t read. In a human sense. Words create sentences, paragraphs, settings, characters, feelings, dreams, empathy and all the intangible bits in between. A computer simply detects, counts and follows the instructions provided by humans. No machine on earth understands Toni Morrison’s Beloved.

At the same time, no human can examine, in any way, 10,000 books at a time. We’re in this funny place where people assess the fundamental unit of literature (the story) while a computer assesses all the units in totality. The disparity — that gap — between what a human can understand and what a machine can understand is one of the root disagreements, among others, in academia when it comes to methodology around deploying computers to ask big questions about history.

Does a computer end up analyzing literature, itself or those who coded the question?

“Often times in the humanities, the idea of building a tool holds some sort of mysterious allure,” says Lauren Klein, a literature professor at Georgia Tech. She uses machine learning techniques in her own research, but notes, “They need to be accompanied by techniques that have been honed over time that are accompanied by literary criticism…A lot of times they’re employed as proof of results: ‘I applied this technique and behold my conclusion’.”

Klein raises the important point: Aren’t we just applying another filter to literature? There was bias when we examined literature through the lens of traditionally taught texts. Computers simply follow the instructions of people. The coding would be reflective of the way the researcher, of some particular demographic, wants to examine history in the first place.

Non-academics have to watch this bias too. Google’s Ngram Viewer looks at the frequency of certain words over all the books the company has scanned. Articles tend to cite these graphs when trying to understand a word’s usage. Yet the word’s frequency in books doesn’t deliver the full picture of that word’s place in culture. Imagine the difference in usage for “fuck” in literature versus spoken language.

“We’re bound by our data,” Klein says.

With any of these experiments comes the question of sample. Where did you get your text? And is that text representative of the question you’re trying to answer? The reason so many literary scholars are training machine learning on 19th century texts, and not 20th century texts, is simply due to copyright protections on the later works. They’re harder to access.

Today we are creating a corpus of text that, ostensibly, should be technically easier for later generations to analyze. Almost every bit of text has a digital version. These sorts of macro-analyses could paint a much more complete picture in the future.

We just need to decide how we let the machines do the reading.

“Mathematically, things can make sense, but in the real world they’re garbage,” So says. “The final result is always up to the human.”



This post was written by Caleb Garling. You can follow him on Twitter at @calebgarling.